Yingjia Wan

(I also often go by 'Alisa'.)

About

I am Yingjia Wan, a first-year PhD student at UCLA, advised by Prof. Elisa Kreiss in the Computation and Language for Society (Coalas) Lab and the UCLA NLP group. My research interests include, but are not limited to, large language model (LLM) reasoning, evaluation, AI for mathematics (AI4MATH), and post-training alignment.

- Education: I obtained my master's degree from the Language Technology Lab at the University of Cambridge in 2023. I received my bachelor's degree from the University of Macau, where I graduated as valedictorian of the Class of 2021.

- Collaboration: Since I began working on AI/NLP in 2023, I have been incredibly grateful to learn from outstanding AI researchers and mentors, including Prof. Elisa Kreiss, Prof. Zhijiang Guo, Dr. Ivan Vulić, Dr. Jianqiao Lu, Dr. Yinya Huang, and Dr. Zhengying Liu.

I am always open to a chat or collaboration. Feel free to drop me an email!

Interests

- LLM Reasoning

- Long-Form Generation Evaluation

- Autoformalization for Theorem Proving

- Post-Training RL

Education

University of California, Los Angeles (PhD), 2025-present

University of Cambridge (MPhil), 2022-2023

University of Macau (BA), 2017-2021

Featured Publications

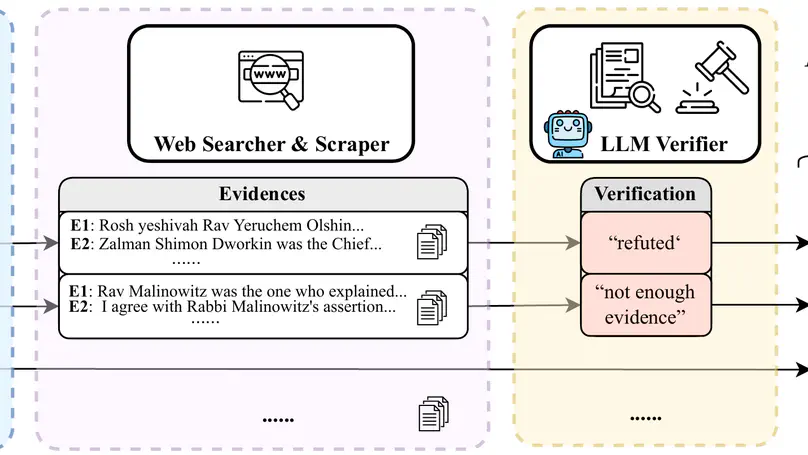

FaStfact is a multi-agent pipeline for evaluating the factuality of long-form generation. Among existing baselines, it achieves the highest alignment with human evaluation as well as the best time and token efficiency. An annotated benchmark, FaStfact-bench, is also open-sourced.

SATBench is a benchmark for evaluating the logical reasoning of LLMs through logical puzzles derived from Boolean satisfiability (SAT) problems.

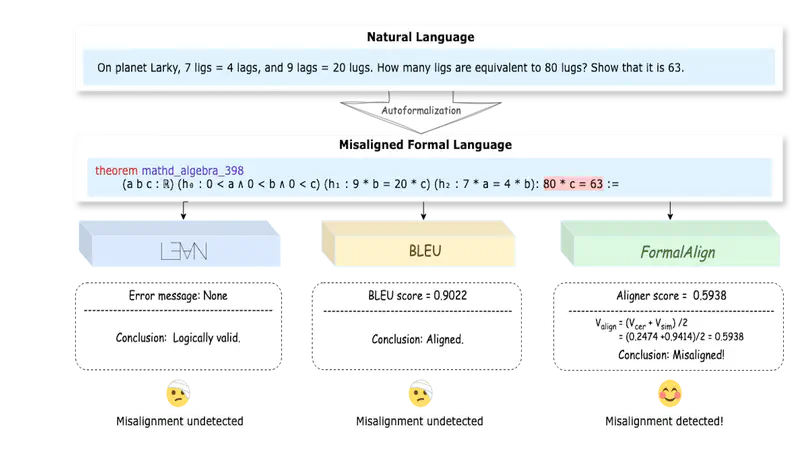

FormalAlign addresses a long-standing challenge in autoformalization: the absence of an effective metric for evaluating alignment. It accurately assesses the semantic and logical alignment between natural-language statements and their formal theorems.

MR-BEN is a comprehensive process-based benchmark for evaluating advanced 'meta-reasoning' skills, in which models are asked to locate and analyse errors in given chain-of-thought (CoT) solutions. It comprises 5,975 multi-domain samples with annotated ground truths.

Research Experience

Certs & Awards

Contact

- yingjiawan.alisa[at]gmail.com

- Los Angeles, California 90024